Monetizing machine learning refers to transforming machine learning projects into profitable web applications. Monetizing an ML project involves several steps: understanding the problem, developing the ML model, developing the web application, integrating the model into the web application, deploying the final web app on a serverless cloud, and finally monetizing the application.

The idea behind monetizing a machine learning project is simple: build a simple, fast SaaS application around the project and monetize it.

Software as a Service (SaaS) is a good choice because of benefits such as reduced costs, scalability, and ease of management.

To monetize, we can consider subscription-based pricing, premium features, API access, advertising, custom services, etc.

Let’s understand how to transform a machine learning project into a web application and monetize it.

Understanding the Problems

Take a real-world problem and research whether it can be solved using machine learning. If it can, find out whether implementing the solution is feasible with the resources you have.

Who will benefit from the ML solution, that is, who is the end user of the final machine learning application? Understanding the users is very important when you are analyzing a real-world problem.

What type of machine learning task does the problem fall under? What types of models can be used to solve it? Can the problem be solved using regression, classification, or clustering models? A proper understanding of the problem will help you answer these questions.

What would be the business model? A web application, a mobile application, API sales, or a combination of two or more?

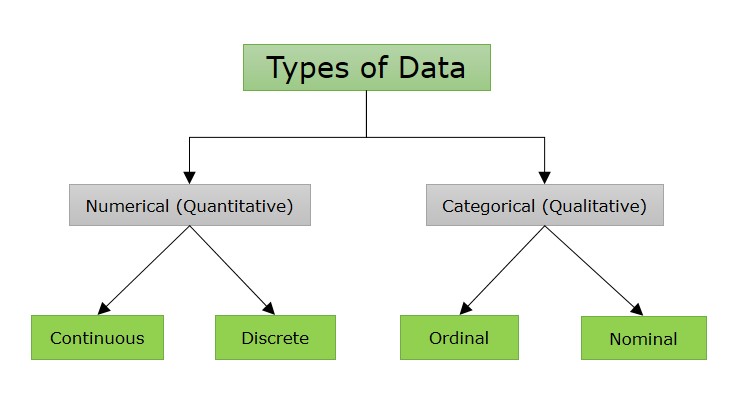

What type of data do you have? Structured or unstructured? Analyze the data properly before trying to solve the problem. It will help you decide which machine learning approach to follow.

What computational resources do you have? Where will you develop the ML models − on premises or in the cloud?

Make sure you properly understand the real-world problem that you want to solve.

Defining the Solution

What will be the final solution of the problem?

Define the solution − how you will present it to the end user: as a web application, a mobile app, an API, or a combination.

What is the business model?

Define your business model. What type of product do you want to create around your machine learning model? One of the best options is to create a Software as a Service (SaaS) product. You can also consider PaaS, AIaaS, mobile applications, API services, selling ML APIs, etc.

Building a web application using serverless technology is a good way to showcase your machine learning application or solution. It also makes it easier to monetize your solution later on.

Once you have decided how you will bring the solution to the world, the next step is defining the core features of your machine learning solution. User interaction with the application, navigation, login, security, data privacy, etc., should be defined before diving into building the machine learning model.

Developing Machine Learning Model

The next step is to start developing your machine learning model. But before actually starting, you need to understand machine learning models in detail. Without a good knowledge of ML models, you will not be able to decide which model to select for your problem.

Understand Machine Learning Models

It is very important to understand the different types of machine learning models and how to choose the right one for your project. This understanding will help you select an appropriate model for your machine learning application.

Knowing which machine learning task the underlying solution falls under will help you decide on the proper model. Suppose your solution is a classification task; then you have many choices of machine learning model. You can apply Naive Bayes, logistic regression, k-nearest neighbors, decision trees, and many more. So a proper understanding of models is required before you get your hands dirty with data and model training.

Types of ML Models

You should have a good understanding of the following types of machine learning models −

- Supervised − regression, classification

- Unsupervised − clustering, dimensionality reduction

- Reinforcement − game theory, multi-agent systems

- Neural Networks − recognition (image, speech), NLP

Select the Right Model

The most important step in building a machine learning model is to select the right one that solves your business problem. While selecting the right ML model, you should consider different factors such as −

- Data characteristics − consider the nature of data (structured, unstructured, time series data) to select a suitable model.

- Problem type − determine whether your problem is regression, classification or other task.

- Model complexity − determine the optimal model complexity to avoid overfitting or underfitting.

- Computational resources − consider the computational resources you have when choosing between a complex and a simple model.

- Desired outcome − consider the outcome you want when choosing how to evaluate the model.
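One way to apply these factors is to train several candidate models on the same data and compare them on held-out validation data. The following is a minimal sketch under stated assumptions: the dataset is made up, and `fit_majority` and `fit_nearest_neighbor` are hypothetical toy models written in plain Python, standing in for whatever candidates you would actually compare with an ML library.

```python
from collections import Counter

def fit_majority(train):
    """Baseline: always predict the most common training label."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label

def fit_nearest_neighbor(train):
    """1-nearest-neighbor on a single numeric feature."""
    return lambda x: min(train, key=lambda row: abs(row[0] - x))[1]

def accuracy(model, rows):
    """Fraction of rows whose label the model predicts correctly."""
    return sum(model(x) == y for x, y in rows) / len(rows)

# Made-up data for illustration: (feature, label) pairs.
train = [(0.1, 0), (0.2, 0), (0.3, 0), (0.7, 1), (0.8, 1)]
valid = [(0.15, 0), (0.25, 0), (0.75, 1), (0.9, 1)]

candidates = {
    "majority_baseline": fit_majority,
    "nearest_neighbor": fit_nearest_neighbor,
}

# Train every candidate on the same training data and score it on validation data.
scores = {name: accuracy(fit(train), valid) for name, fit in candidates.items()}
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

Including a trivial baseline is a deliberate choice here: if a more complex model cannot beat it on validation data, the extra complexity is probably not justified.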

Train Machine Learning Model

After selecting the right model for your machine learning problem, the next step is to start building the actual machine learning model. There are different ways to build an ML model. The easiest way is to take a pre-trained model and train it further on your own datasets.

Pre-trained models − Pre-trained models are machine learning models that are trained with huge datasets. If your data is similar to the datasets on which the pre-trained models are trained, you can select them for your solution. In such cases, you need only to build a web or mobile application and deploy it on the cloud for worldwide users.

Fine-Tuning Pre-Trained Model − You can consider fine-tuning a pre-trained model on your custom datasets. You can fine-tune any publicly available model using machine learning libraries and frameworks such as TensorFlow/Keras and PyTorch. You can also consider cloud platforms such as Amazon SageMaker, Vertex AI, IBM Watson Studio, and Azure Machine Learning for fine-tuning.

Build from Scratch − You can consider building a machine learning model from scratch if you have all the required resources. It takes more time than the two approaches above, and the cost depends on how much data and compute the training needs.

Amazon SageMaker is a cloud-based machine learning platform for creating, training, evaluating, and deploying machine learning models.

Evaluate Model

You have trained your ML model on your custom dataset. Now you have to evaluate the model on new data to check whether it produces the outcomes you want.

For evaluating your machine learning model, you can calculate the metrics such as accuracy, precision, recall, f1 score, confusion matrix, etc. Based on these metrics, you can decide on a further course of action − finalizing the current model or going back with training again.
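As an illustration of how these metrics relate to each other, here is a minimal sketch for a binary classifier in plain Python. The function name `binary_metrics` and the sample labels are assumptions made for this example; in practice you would typically use the metric functions of your ML library.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute the confusion matrix and derived metrics for binary labels."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if truth == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative

    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "confusion_matrix": [[tn, fp], [fn, tp]],  # rows: actual, columns: predicted
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Made-up labels for illustration.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Accuracy alone can be misleading on imbalanced data, which is why precision, recall, and the F1 score are reported alongside it.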

You can also consider ensemble methods, which combine multiple models (for example, bagging and boosting) to improve model performance and reduce overfitting.
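The aggregation step that bagging-style ensembles use for classification can be sketched as a majority vote over the predictions of several models. This is only an illustration of the voting idea, not a full bagging or boosting implementation: the three "models" below are hypothetical threshold rules standing in for trained classifiers.

```python
from collections import Counter

def majority_vote(models, x):
    """Return the label that most models predict for input x."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]

# Hypothetical stand-ins for trained models: threshold rules on one number.
models = [
    lambda x: int(x > 0.3),
    lambda x: int(x > 0.5),
    lambda x: int(x > 0.7),
]

for x in (0.4, 0.6):
    print(x, "->", majority_vote(models, x))
```

Using an odd number of models avoids ties in the binary case.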

Deploy Demo Model Online

Before building a full-fledged web application and deploying it on a cloud server, it is advisable to deploy a demo of your machine learning model online. Several hosting providers, some with free tiers, let you deploy a model and get feedback from real users. You can consider the following providers for this purpose −

- Hugging Face Spaces

- Streamlit Cloud

- Heroku

Creating Machine Learning Web Applications

By now, you have developed your ML model and deployed the demo model online. Once the model is working as expected, you are ready to build a full-fledged machine learning web or mobile application.

You can consider the following technology stack to build web applications −

- Python frameworks − Flask, Django, FastAPI, etc.

- Web development (frontend) concepts − HTML, CSS, JavaScript

- Integrating machine learning models − how to integrate the model using APIs or libraries, for example through a REST API
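To make the integration step concrete, the sketch below exposes a model behind a small JSON endpoint. It is a minimal illustration under stated assumptions: it uses only the standard library's WSGI interface so that it is self-contained (a real application would more likely use Flask, Django, or FastAPI), the `/predict` path and the `{"features": [...]}` request shape are choices made for this example, and `predict` is a hypothetical stand-in for your trained model.

```python
import json
from io import BytesIO

JSON_HEADERS = [("Content-Type", "application/json")]

def predict(features):
    """Hypothetical stand-in for a trained model: averages the features."""
    score = sum(features) / len(features) if features else 0.0
    return {"score": score, "label": "positive" if score >= 0.5 else "negative"}

def app(environ, start_response):
    """Minimal WSGI app: POST /predict with a JSON body {"features": [...]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/predict":
        start_response("404 Not Found", JSON_HEADERS)
        return [json.dumps({"error": "not found"}).encode("utf-8")]
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        features = [float(v) for v in payload["features"]]
    except (KeyError, TypeError, ValueError):
        start_response("400 Bad Request", JSON_HEADERS)
        return [json.dumps({"error": "expected a JSON body with a 'features' list"}).encode("utf-8")]
    start_response("200 OK", JSON_HEADERS)
    return [json.dumps(predict(features)).encode("utf-8")]

def call_app(method, path, body=b""):
    """Invoke the app in-process, without opening a network socket."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": BytesIO(body),
    }
    chunks = app(environ, start_response)
    return captured["status"], json.loads(b"".join(chunks))

request_body = json.dumps({"features": [0.2, 0.9, 0.7]}).encode("utf-8")
status, result = call_app("POST", "/predict", request_body)
print(status, result)
```

To try it over HTTP locally, the same `app` object can be passed to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)` and served with `serve_forever()`. Keeping the model behind a single `predict` function means the web layer does not need to change when the model is retrained or replaced.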

Deploying on the Serverless Cloud

Deploying your ML application on a serverless cloud opens the door to monetizing it, because the application can reach a worldwide audience. A cloud platform is a good choice for hosting your app, and going serverless can bring reduced costs, scalability, and ease of management.

The following is a list of some well-known serverless cloud service providers best for your machine learning web applications −

- Google Cloud Platform − Google Cloud Functions

- Amazon Web Services − AWS Lambda, AWS Fargate, AWS Amplify Hosting

- Microsoft Azure − Azure Functions

- Heroku

- PythonAnywhere

- Cloudflare Workers

- Vercel Functions

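On AWS, you can also use services like Amazon EC2 for computing power and Amazon S3 for storage. Note that EC2 provides virtual servers that you manage yourself, so it is not a serverless service.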
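As an example of what a serverless deployment looks like in code, here is a minimal sketch of a Python function in the shape AWS Lambda expects. It assumes the function is invoked through an API Gateway proxy integration, so the request body arrives as a JSON string in `event["body"]` and the response is a dictionary with `statusCode`, `headers`, and `body`. The name `lambda_handler` is a common convention rather than a requirement, and `predict` is a hypothetical stand-in for loading and calling your trained model.

```python
import json

def predict(features):
    """Hypothetical stand-in for a trained model: flags large feature sums."""
    total = sum(features)
    return {"total": total, "label": "high" if total >= 2.0 else "low"}

def _response(status_code, payload):
    """Build a response in the API Gateway proxy format (assumed here)."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }

def lambda_handler(event, context):
    """Entry point: parse the JSON request body, run the model, return JSON."""
    try:
        payload = json.loads(event.get("body") or "{}")
        features = [float(v) for v in payload["features"]]
    except (KeyError, TypeError, ValueError):
        return _response(400, {"error": "expected a JSON body with a 'features' list"})
    return _response(200, predict(features))

# Local smoke test with a hand-built event; no AWS account is needed for this.
event = {"body": json.dumps({"features": [1, 0.5, 1]})}
response = lambda_handler(event, None)
print(response["statusCode"], response["body"])
```

Because the handler is a plain function, it can be tested locally with hand-built events before it is deployed.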

Monetizing Your Machine Learning Applications

Now your machine learning application is live on the cloud. You can promote and market it to your users, and you can give them special offers to use your application.

Your machine learning application can reach any corner of the world. When you have enough users, you can think about monetizing your application. There are different strategies to monetize an ML web application, including subscription models, pay-per-use pricing, advertising, premium features, etc.

- Subscription Model − Subscription-based pricing tiers (e.g., basic, premium, enterprise).

- Freemium Model − Offer a free version with limited features, and charge for advanced features.

- API Access − Charge businesses to access your AI tools via an API.

- Custom Solutions − Offer bespoke machine learning services for larger clients.

- Advertising − You can also consider placing advertisements in your application, but keep in mind that advertisements can detract from your application’s premium look.
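The subscription, freemium, and pay-per-use ideas above can be combined in a simple usage-based billing rule. The sketch below is only an illustration: the plan names, prices, and quotas are made up, and amounts are kept in integer cents to avoid floating-point rounding issues.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Plan:
    name: str
    monthly_fee_cents: int   # flat subscription price
    included_calls: int      # API calls covered by the flat fee
    overage_cents: int       # price per call beyond the quota (0 = hard limit)

# Hypothetical plans and prices, for illustration only.
PLANS = {
    "free": Plan("free", 0, 100, 0),
    "basic": Plan("basic", 1_000, 1_000, 2),
    "premium": Plan("premium", 5_000, 10_000, 1),
}

def can_call(plan, calls_used):
    """Free users are blocked at their quota; paid plans may exceed it and pay overage."""
    return plan.overage_cents > 0 or calls_used < plan.included_calls

def monthly_bill_cents(plan, calls_used):
    """Flat fee plus pay-per-use charges for calls beyond the included quota."""
    extra_calls = max(0, calls_used - plan.included_calls)
    return plan.monthly_fee_cents + extra_calls * plan.overage_cents

bill = monthly_bill_cents(PLANS["basic"], 1_500)
print(f"basic plan, 1500 calls: {bill / 100:.2f}")
print("free user at quota may call:", can_call(PLANS["free"], 100))
```

A rule like this would typically run in the API layer before each prediction request, so that free users hit a limit while paying users are billed for extra usage.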

Marketing and Sales

Marketing and sales are important to grow any business. Continuous marketing is required to keep the product selling.

You can sell your Machine Learning application APIs on different online API marketplaces.

You can consider the following API Marketplaces −

- RapidAPI

- APILayer

- AWS Marketplace

- Infosys API Marketplace

- IBM API Connect

Monetizing machine learning has become easier but also more competitive. Monetizing an ML application requires a detailed market analysis before you start building the application. Each step of machine learning software development needs deep research. Building a minimum viable product (MVP) and testing it before building a full-fledged web application is advisable.